Hadoop Big Data Platform

Hadoop Overview

Hadoop is a big data platform that provides both distributed storage and distributed computing.

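Big data is commonly characterized by the so-called 4 Vs:

- Volume: both the amount of data to store and the amount of computation are huge.
- Variety: data comes from many sources in many different formats.
- Velocity: data grows quickly and must also be processed quickly.
- Value: value density is low; the larger the total volume, the smaller the proportion of valuable data.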

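Several Hadoop distributions are currently available:

- Apache Hadoop: the official upstream release. It is open source, but deployment and operations are inconvenient.
- Cloudera Hadoop (CDH): a commercial distribution with vendor optimizations over the upstream release, paid technical support, and a web UI that makes operations and management fairly convenient.
- Hortonworks (HDP): open source, also provides a web UI and is fairly easy to operate and manage, but Hortonworks has since been merged into Cloudera.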

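Download locations for both the CDH and HDP installation packages can be found with a search engine. Compared with the upstream Apache release, they offer a much better user experience.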

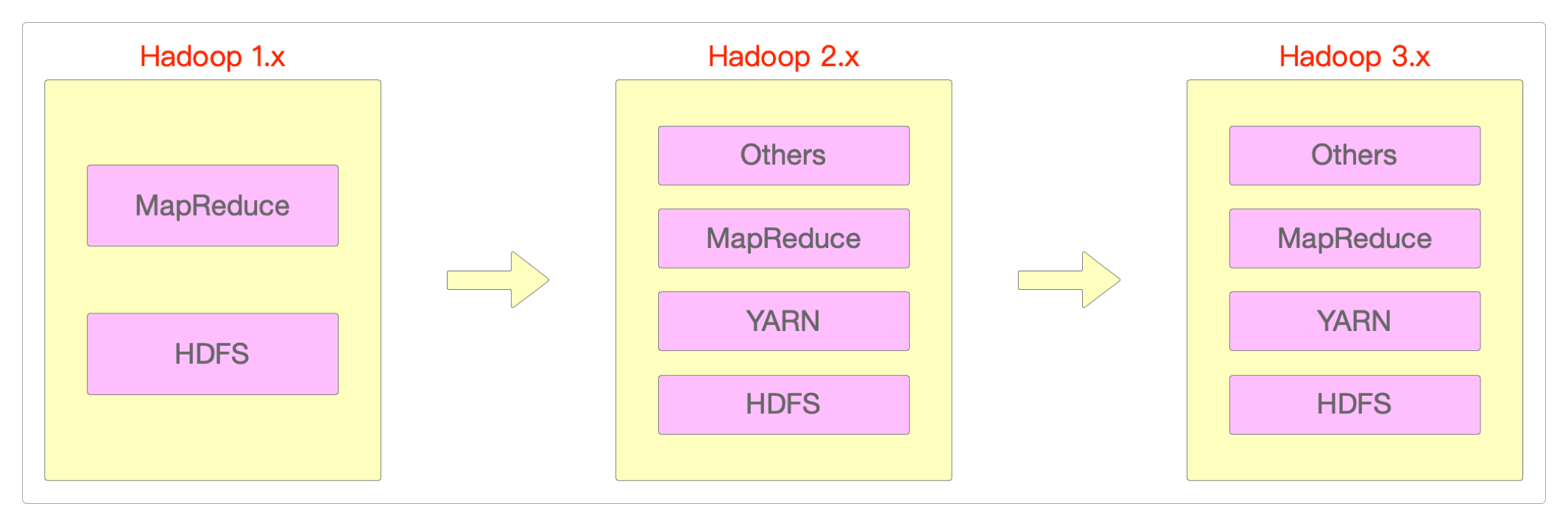

The changes from Hadoop 1.x to 2.x were substantial, whereas the move from 2.x to 3.x mostly brought optimizations in technical details.

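Hadoop has three core components: HDFS (distributed storage), YARN (resource management and job scheduling), and MapReduce (distributed computation).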

Docker Deployment

You can follow the deployment guide on hub.docker.com.

- Install Docker Compose, then start the services defined in the following docker-compose.yml (for example with `docker compose up -d`).

```yaml
version: "2"
services:
  namenode:
    ulimits:
      nofile:
        soft: 65536
        hard: 65536
    image: apache/hadoop:3.3.6
    hostname: namenode
    command: ["hdfs", "namenode"]
    ports:
      - 9870:9870   # NameNode web UI
    env_file:
      - ./config
    environment:
      ENSURE_NAMENODE_DIR: "/usr/local/hadoop"
  datanode:
    ulimits:
      nofile:
        soft: 65536
        hard: 65536
    image: apache/hadoop:3.3.6
    command: ["hdfs", "datanode"]
    env_file:
      - ./config
  resourcemanager:
    ulimits:
      nofile:
        soft: 65536
        hard: 65536
    image: apache/hadoop:3.3.6
    hostname: resourcemanager
    command: ["yarn", "resourcemanager"]
    ports:
      - 8088:8088   # ResourceManager web UI
    env_file:
      - ./config
  nodemanager:
    ulimits:
      nofile:
        soft: 65536
        hard: 65536
    image: apache/hadoop:3.3.6
    command: ["yarn", "nodemanager"]
    env_file:
      - ./config
```

- In the directory that contains docker-compose.yml, create a file named `config` with the following content.

```
HADOOP_HOME=/opt/hadoop
CORE-SITE.XML_fs.default.name=hdfs://namenode
CORE-SITE.XML_fs.defaultFS=hdfs://namenode
HDFS-SITE.XML_dfs.namenode.rpc-address=namenode:9000
HDFS-SITE.XML_dfs.replication=1
MAPRED-SITE.XML_mapreduce.framework.name=yarn
MAPRED-SITE.XML_yarn.app.mapreduce.am.env=HADOOP_MAPRED_HOME=$HADOOP_HOME
MAPRED-SITE.XML_mapreduce.map.env=HADOOP_MAPRED_HOME=$HADOOP_HOME
MAPRED-SITE.XML_mapreduce.reduce.env=HADOOP_MAPRED_HOME=$HADOOP_HOME
YARN-SITE.XML_yarn.resourcemanager.hostname=resourcemanager
YARN-SITE.XML_yarn.nodemanager.pmem-check-enabled=false
YARN-SITE.XML_yarn.nodemanager.delete.debug-delay-sec=600
YARN-SITE.XML_yarn.nodemanager.vmem-check-enabled=false
YARN-SITE.XML_yarn.nodemanager.aux-services=mapreduce_shuffle
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.maximum-applications=10000
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.maximum-am-resource-percent=0.1
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.resource-calculator=org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.queues=default
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.capacity=100
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.user-limit-factor=1
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.maximum-capacity=100
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.state=RUNNING
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.acl_submit_applications=*
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.root.default.acl_administer_queue=*
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.node-locality-delay=40
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.queue-mappings=
CAPACITY-SCHEDULER.XML_yarn.scheduler.capacity.queue-mappings-override.enable=false
```

Local Deployment

Compared with Docker, deploying Hadoop in pseudo-distributed mode directly on the host is somewhat more involved.

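Set the hostname.

```shell
# Takes effect immediately, but is lost after a reboot
> hostname hadoop
# Make it permanent: enter the hostname, then save and exit
> vi /etc/hostname
hadoop
```

Configure an SSH key pair for passwordless login (no input is needed; just press Enter at every prompt).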

```shell
> ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa
Your public key has been saved in /root/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:cYSh2lT8TVg7FD0bA2keihzbzHZxGrnxCiNPfqLFVg4 root@hadoop
The key's randomart image is:
+---[RSA 3072]----+
| .oo.o=* |
| o+...X.* |
| o..O.*oX = |
| + =oE B.o |
| . . SB B . |
| B + |
| + o |
| . |
| |
+----[SHA256]-----+
```

Append the contents of the public key file to authorized_keys using output redirection.

```shell
> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
```

Verify that SSH login works.
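If the `ssh hadoop` login below still asks for a password, a frequent cause is that `~/.ssh` or `authorized_keys` is writable by group or others, which sshd rejects under its default `StrictModes yes` setting. Running the `cat >>` command more than once also leaves duplicate key lines. The bash function below is a minimal sketch that appends the key only once and tightens the permissions; the name `add_authorized_key` and its optional second argument (the `.ssh` directory, assumed to default to `$HOME/.ssh`) are hypothetical, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a public key to authorized_keys exactly once
# and apply the owner-only permissions that sshd's StrictModes expects.
add_authorized_key() {
  local pubkey_file="$1"
  local ssh_dir="${2:-$HOME/.ssh}"   # assumption: defaults to ~/.ssh
  local auth_file="$ssh_dir/authorized_keys"

  if [ ! -r "$pubkey_file" ]; then
    echo "cannot read public key: $pubkey_file" >&2
    return 1
  fi

  mkdir -p "$ssh_dir"
  touch "$auth_file"

  # If the existing file does not end with a newline, add one first
  if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
    echo >> "$auth_file"
  fi

  # Append only when the exact key line is not already present
  if ! grep -qxF -f "$pubkey_file" "$auth_file"; then
    cat "$pubkey_file" >> "$auth_file"
  fi

  # Only the owner may read/write ~/.ssh and authorized_keys
  chmod 700 "$ssh_dir"
  chmod 600 "$auth_file"
}

# Usage:
#   add_authorized_key ~/.ssh/id_rsa.pub
```

Calling it repeatedly is harmless, which makes it safe to keep in a setup script.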

```shell
> ssh hadoop
The authenticity of host 'hadoop (......)' can't be established.
ED25519 key fingerprint is SHA256:......
This key is not known by any other names
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'hadoop' (ED25519) to the list of known hosts.
Activate the web console with: systemctl enable --now cockpit.socket
Last login: X Y z xx:xx:xx 2024
```

Configure the Java environment variables (steps omitted here).

Download and extract the hadoop-3.2.0 package.

```shell
> cd /home/work
> wget https://archive.apache.org/dist/hadoop/common/hadoop-3.2.0/hadoop-3.2.0.tar.gz
> tar -xvf hadoop-3.2.0.tar.gz
```

Configure the Hadoop environment variables by appending the following to the end of /etc/profile.

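```shell
> vi /etc/profile
# Hadoop
export HADOOP_HOME=/home/work/hadoop-3.2.0
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
```

Run `source /etc/profile` (or log in again) so that the variables take effect. Then edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and append the following at the end of the file.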

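```shell
> vi /home/work/hadoop-3.2.0/etc/hadoop/hadoop-env.sh
# Path of the locally installed JDK
export JAVA_HOME=/usr/local/java/jdk1.8.0_401
export HADOOP_LOG_DIR=/home/work/volumes/hadoop/logs
```

Edit $HADOOP_HOME/etc/hadoop/core-site.xml so that its `<configuration>` element contains the following properties.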

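```xml
<configuration>
  <!-- Default file system: the NameNode RPC address -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop:9000</value>
  </property>
  <!-- Base directory for Hadoop's working data (HDFS metadata and blocks by default) -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/work/volumes/hadoop</value>
  </property>
</configuration>
```

Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml so that its `<configuration>` element contains the following properties.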

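```xml
<configuration>
  <!-- Only one DataNode, so keep a single replica of each block -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Disable HDFS permission checks (acceptable for a test setup only) -->
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
</configuration>
```

Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml so that its `<configuration>` element contains the following property.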

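```xml
<configuration>
  <!-- Run MapReduce jobs on YARN instead of the local runner -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```

Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml so that its `<configuration>` element contains the following properties.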

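```xml
<configuration>
  <!-- Auxiliary shuffle service required by MapReduce -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Environment variables containers may override rather than take from the NodeManager defaults -->
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
```

Edit $HADOOP_HOME/etc/hadoop/workers to list the hostnames of the worker nodes (where the DataNode and NodeManager run). In this single-node setup the file contains only: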

```
hadoop
```

Format HDFS (this must be done only once).
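Because formatting should happen only once, the format command below can be wrapped in a guard. This bash sketch treats the presence of `current/VERSION` inside the NameNode storage directory as a sign that formatting has already happened. The function name is hypothetical, and the path in the usage comment assumes the default `dfs.namenode.name.dir`, which resolves to `${hadoop.tmp.dir}/dfs/name`; adjust it to the storage directory reported in your own format log.

```shell
#!/usr/bin/env bash
# Hypothetical guard: exit status 0 means "not formatted yet, safe to format".
# A formatted NameNode storage directory contains current/VERSION.
namenode_needs_format() {
  local name_dir="$1"
  if [ -z "$name_dir" ]; then
    echo "usage: namenode_needs_format <namenode-dir>" >&2
    return 2
  fi
  [ ! -f "$name_dir/current/VERSION" ]
}

# Usage (assumed path: default dfs.namenode.name.dir under the
# hadoop.tmp.dir configured in core-site.xml):
#   if namenode_needs_format /home/work/volumes/hadoop/dfs/name; then
#     ./bin/hdfs namenode -format
#   else
#     echo "NameNode already formatted, skipping"
#   fi
```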

```shell
> cd /home/work/hadoop-3.2.0
> ./bin/hdfs namenode -format
2024-xx-xx xx:xx:xx,866 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop/172.16.185.176
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 3.2.0
......
2024-xx-xx xx:xx:xx,709 INFO namenode.FSImage: Allocated new BlockPoolId: BP-387136630-172.16.185.176-1720422190698
2024-xx-xx xx:xx:xx,725 INFO common.Storage: Storage directory /home/work/hadoop/dfs/name has been successfully formatted.
2024-xx-xx xx:xx:xx,740 INFO namenode.FSImageFormatProtobuf: Saving image file /home/work/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
2024-xx-xx xx:xx:xx,861 INFO namenode.FSImageFormatProtobuf: Image file /home/work/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .
2024-xx-xx xx:xx:xx,885 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2024-xx-xx xx:xx:xx,897 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop/172.16.185.176
************************************************************/
```

If formatting fails or HDFS has to be formatted again, empty the directory configured as `hadoop.tmp.dir` in core-site.xml and then run the command again.
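Emptying the whole directory matters because, with the defaults, both the NameNode metadata (`dfs/name`) and the DataNode blocks (`dfs/data`) live under `hadoop.tmp.dir`; a DataNode that still holds data from an earlier format typically fails to start with an "Incompatible clusterIDs" error. The bash function below is a cautious sketch for emptying that directory. Its name is hypothetical, it refuses an empty path or `/`, and it should only be run after the cluster has been stopped. Note that the `HADOOP_LOG_DIR` set in hadoop-env.sh above also sits under this directory, so the logs are removed as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: empty hadoop.tmp.dir before re-formatting HDFS.
# Deletes only the contents (hidden files included), never the directory itself.
clear_hadoop_tmp_dir() {
  local dir="$1"
  # Guard against an unset variable or the filesystem root
  if [ -z "$dir" ] || [ "$dir" = "/" ]; then
    echo "refusing to clear '$dir'" >&2
    return 1
  fi
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # -mindepth 1 skips $dir itself; -delete removes entries depth-first
  find "$dir" -mindepth 1 -delete
}

# Usage (stop the cluster first; the path is hadoop.tmp.dir from core-site.xml):
#   ./sbin/stop-all.sh
#   clear_hadoop_tmp_dir /home/work/volumes/hadoop
#   ./bin/hdfs namenode -format
```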

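Edit start-dfs.sh and stop-dfs.sh in $HADOOP_HOME/sbin and add the following lines at the top of each file, right after the first (shebang) line. Hadoop 3 refuses to start or stop daemons as root unless these user variables are defined.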

```shell
> cd /home/work/hadoop-3.2.0
> vi ./sbin/start-dfs.sh
HDFS_DATANODE_USER=root
HDFS_DATANODE_SECURE_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
> vi ./sbin/stop-dfs.sh
HDFS_DATANODE_USER=root
HDFS_DATANODE_SECURE_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```

In the same way, edit start-yarn.sh and stop-yarn.sh in $HADOOP_HOME/sbin and add the following lines at the top of each file.

```shell
> cd /home/work/hadoop-3.2.0
> vi ./sbin/start-yarn.sh
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
> vi ./sbin/stop-yarn.sh
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```

Start the pseudo-distributed Hadoop cluster.

```shell
> cd /home/work/hadoop-3.2.0
> ./sbin/start-all.sh
Starting namenodes on [hadoop]
Starting datanodes
Starting secondary namenodes [hadoop]
Starting resourcemanager
Starting nodemanagers
```

Check the running Java processes to verify that Hadoop started successfully.

```shell
> jps
20865 SecondaryNameNode
21122 ResourceManager
20649 DataNode
20474 NameNode
21693 Jps
21278 NodeManager
```
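With five daemons to look for, comparing the `jps` listing by eye is easy to get wrong. The bash function below is a small sketch that reads `jps` output on standard input and reports which of the expected pseudo-distributed daemons are missing; the function name is hypothetical, and the daemon list is taken from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `jps` output on stdin and report which of the
# pseudo-distributed Hadoop daemons are missing. Returns 0 only if all run.
check_hadoop_daemons() {
  local expected="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"
  local running missing="" d
  # jps prints "<pid> <main class name>"; keep only the class names
  running=$(awk '{print $2}')
  for d in $expected; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"
    if ! grep -qx "$d" <<< "$running"; then
      missing="$missing $d"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "all daemons running"
}

# Usage:
#   jps | check_hadoop_daemons
```

A non-zero exit status makes the check easy to reuse in scripts, for example right after `./sbin/start-all.sh`.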
